Artificial intelligence (AI) has become a cornerstone of modern surveillance and facial recognition technologies. These systems can analyze large volumes of data quickly, which makes them useful for applications ranging from improving security to streamlining services. As these technologies become more widespread, however, several ethical concerns have come to the forefront.

Understanding these issues is crucial to ensuring that the use of AI in surveillance and facial recognition respects individual rights and freedoms.

Privacy Intrusion

One of the most pressing ethical concerns is the potential for privacy invasion. AI-driven surveillance systems can track people’s movements and activities without their consent, often in great detail. This pervasive monitoring can feel like an intrusion into personal lives, raising questions about the right to privacy. People may begin to feel like they’re constantly being watched, leading to a sense of unease and discomfort in their daily lives.

Bias and Discrimination

Another significant issue is the risk of bias and discrimination. AI systems, including facial recognition technologies, are only as unbiased as the data they’re trained on. If this data contains biases, the AI can perpetuate or even exacerbate them when making decisions. For example, there have been instances where facial recognition has been shown to be less accurate at identifying individuals from certain racial or ethnic groups.

This can lead to unfair treatment or discrimination in areas ranging from law enforcement to job opportunities.
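One way to make an accuracy disparity like this concrete is to measure accuracy separately for each demographic group in a labeled evaluation set. The sketch below is only an illustration: the record layout, the group labels, and the function names `accuracy_by_group` and `accuracy_gap` are assumptions made for this example, and the sample records are invented rather than real measurements.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: fraction of correct identifications}.

    Each record is a (group, predicted_id, true_id) tuple. This layout
    is hypothetical and stands in for a real labeled evaluation set.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted_id, true_id in records:
        total[group] += 1
        if predicted_id == true_id:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Invented records, purely for illustration; not real measurements.
sample = [
    ("group_a", "p1", "p1"),
    ("group_a", "p2", "p2"),
    ("group_a", "p3", "p3"),
    ("group_a", "p4", "p9"),
    ("group_b", "p5", "p5"),
    ("group_b", "p6", "p8"),
    ("group_b", "p7", "p2"),
    ("group_b", "p8", "p8"),
]

per_group = accuracy_by_group(sample)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

A large gap between groups suggests the system serves some people worse than others, even when its overall accuracy looks acceptable. A real audit would also need far more data per group and would look at false matches and false non-matches separately.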

Lack of Consent

Many people are unaware that their images and data are being collected and analyzed by AI systems. This lack of informed consent is a major ethical issue. Individuals often do not have the opportunity to opt out of this data collection, or may not even know it is happening. This raises concerns about autonomy and the ability of individuals to control their personal information and decide how it’s used.

Accountability and Transparency

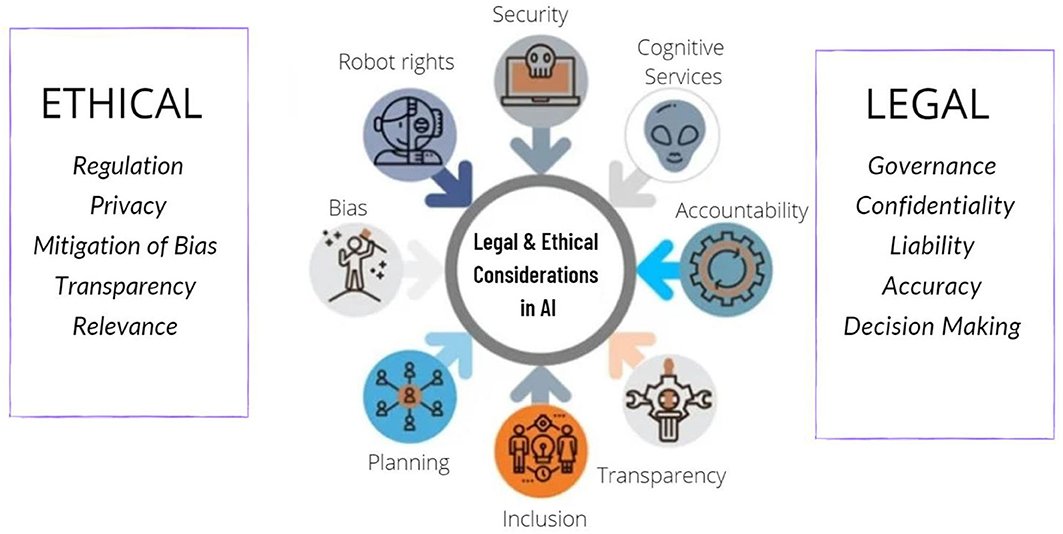

AI systems can be incredibly complex, making it difficult to understand how they reach decisions. This lack of transparency is especially problematic when those decisions have significant consequences for individuals. Moreover, if something goes wrong, such as a wrongful identification by a facial recognition system, it can be challenging to hold anyone accountable.

Determining whether the fault lies with the technology, the data, or the people operating the system can be complicated, often leaving those affected without recourse.

Potential for Misuse

The power of AI-driven surveillance and facial recognition technologies also opens the door to misuse. Governments or other entities could use these tools to suppress dissent, monitor political opponents, or violate human rights. Without strict regulations and oversight, the potential for abuse is a significant ethical concern.

Conclusion

While AI in surveillance and facial recognition can offer benefits, such as enhanced security and efficiency, it is crucial to address the ethical concerns these technologies raise. Privacy, bias, consent, transparency, and the potential for misuse are all issues that need careful consideration. Balancing the advantages of these technologies with respect for individual rights and freedoms is essential to ensuring they are used ethically and responsibly.

As we move forward, fostering a dialogue between technology developers, policymakers, and the public will be key to navigating these ethical complexities.